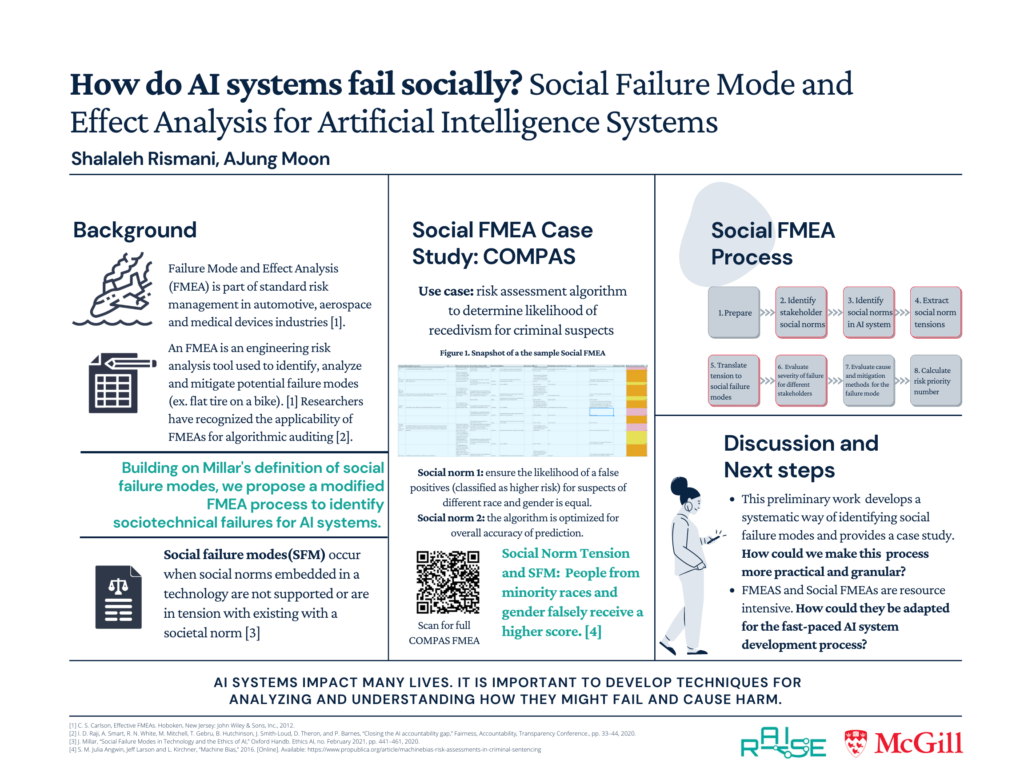

How do AI systems fail socially?

Researchers are actively proposing and testing accountability mechanisms such as algorithmic auditing, impact assessments, and standards. Standard engineering risk management tools have greatly improved safety in the automotive, aerospace, and medical device industries. This research takes a step toward understanding how the AI systems (AIS) community can meaningfully adopt such tools. Building on existing proposals, we investigate how Failure Mode and Effects Analysis (FMEA), a well-established engineering risk assessment tool, could be used to preemptively identify sociotechnical failures in AIS.

Our open-ended research question is: how can developers use FMEA as one of several tools for creating accountability and for improving design to prevent sociotechnical failures?
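For readers unfamiliar with FMEA, here is a minimal sketch of how a conventional FMEA worksheet ranks failure modes. It assumes the common textbook scoring, in which each failure mode is rated for severity, occurrence, and detection on 1 to 10 scales and ranked by the Risk Priority Number (RPN = severity × occurrence × detection). The class `FailureMode`, the function `prioritize`, and the example rows and scores are hypothetical illustrations. They are not taken from the Social FMEA described here, which may score failures differently.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    """One row of a hypothetical FMEA worksheet for an AI system."""
    description: str
    effect: str
    severity: int    # assumed scale: 1 (negligible) .. 10 (catastrophic)
    occurrence: int  # assumed scale: 1 (rare) .. 10 (almost certain)
    detection: int   # assumed scale: 1 (almost certainly caught) .. 10 (undetectable)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1..10 scales.
        for name in ("severity", "occurrence", "detection"):
            value = getattr(self, name)
            if not 1 <= value <= 10:
                raise ValueError(f"{name} must be in 1..10, got {value}")

    @property
    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


def prioritize(modes: list[FailureMode]) -> list[FailureMode]:
    """Return failure modes sorted from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


if __name__ == "__main__":
    # Hypothetical sociotechnical failure modes, for illustration only.
    worksheet = [
        FailureMode("Training data under-represents a user group",
                    "Higher error rates for that group", 8, 5, 7),
        FailureMode("Model output is misread by operators",
                    "Over-reliance on incorrect recommendations", 6, 4, 5),
        FailureMode("No channel to contest automated decisions",
                    "Affected people cannot seek redress", 7, 6, 3),
    ]
    for mode in prioritize(worksheet):
        print(f"RPN {mode.rpn:4d}  {mode.description}")
```

With these made-up ratings, the under-representation row (RPN 280) would be addressed first, followed by the missing redress channel (126) and operator misreading (120). A known limitation of RPN is that very different severity, occurrence, and detection combinations can produce the same product, so teams often also inspect high-severity rows individually.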

To view a sample application of Social FMEA for AI systems, click here.

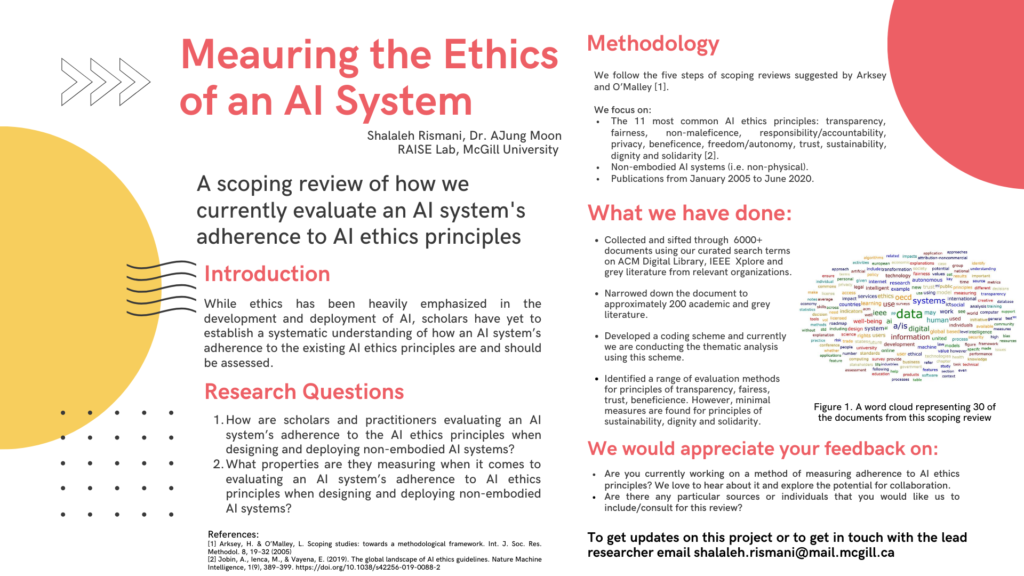

Measuring adherence to AI ethics principles

Over the past few years, many AI ethics principles have been developed. However, research on how to measure an AI system's adherence to these principles is sparse. In this work, we aim to identify existing methods for evaluating an AI system's adherence to the eleven most common AI ethics principles.

To do this, I am conducting a scoping review of academic and grey literature, followed by a thematic analysis of the 200 most relevant documents in this corpus. So far, a range of attempts has been made to evaluate adherence to the principles of transparency, fairness, trust, and beneficence. However, minimal attention has been paid to the principles of solidarity, sustainability, and dignity.
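One simple way to surface coverage gaps like these from a coded corpus is to count, for each principle, how many distinct documents propose a method for evaluating adherence to it. The sketch below assumes each coded excerpt has been reduced to a `(document_id, principle)` pair. The excerpts, the function names `coverage` and `gaps`, and the threshold are hypothetical placeholders, not data or code from this review, and the principle list includes only the seven principles named above rather than all eleven.

```python
# Hypothetical coded excerpts: (document id, principle the evaluation method targets).
# Illustrative placeholders only; not data from the review.
coded_excerpts: list[tuple[str, str]] = [
    ("doc-001", "transparency"),
    ("doc-001", "fairness"),
    ("doc-002", "fairness"),
    ("doc-002", "fairness"),  # repeated code in the same document
    ("doc-003", "trust"),
    ("doc-004", "beneficence"),
]

# Only the principles named in the text; the remaining ones would be added here.
PRINCIPLES = ["transparency", "fairness", "trust", "beneficence",
              "solidarity", "sustainability", "dignity"]


def coverage(excerpts: list[tuple[str, str]], principles: list[str]) -> dict[str, int]:
    """Count distinct documents that propose an evaluation method for each principle."""
    docs_per_principle: dict[str, set[str]] = {p: set() for p in principles}
    for doc_id, principle in excerpts:
        if principle in docs_per_principle:
            docs_per_principle[principle].add(doc_id)  # a set ignores repeat codes
    return {p: len(docs) for p, docs in docs_per_principle.items()}


def gaps(counts: dict[str, int], threshold: int = 1) -> list[str]:
    """Return principles covered by fewer than `threshold` documents."""
    return [p for p, n in counts.items() if n < threshold]


if __name__ == "__main__":
    counts = coverage(coded_excerpts, PRINCIPLES)
    for principle, n in counts.items():
        print(f"{principle:15s} {n}")
    print("Under-evaluated:", gaps(counts))
```

Counting distinct documents rather than raw excerpts keeps a single document that repeats a theme from inflating a principle's apparent coverage.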

I would appreciate your feedback on this research and would love to hear from you if you have done related work.